Embedded EthiCS @ Harvard: Bringing ethical reasoning into the computer science curriculum


Introduction to Computer Science (CS 50) – Spring 2021


Module Topic: Democracy and the Digital Public Sphere

Module Authors: Meica Magnani and Susan Kennedy

Course Level: Introductory undergraduate

AY: 2020-2021

Course Description: “Introduction to the intellectual enterprises of computer science and the art of programming. This course teaches students how to think algorithmically and solve problems efficiently. Topics include abstraction, algorithms, data structures, encapsulation, resource management, security, software engineering, and web programming. Languages include C, Python, and SQL plus HTML, CSS, and JavaScript. Problem sets inspired by the arts, humanities, social sciences, and sciences. Course culminates in a final project. Designed for concentrators and non-concentrators alike, with or without prior programming experience. Two thirds of CS50 students have never taken CS before. Among the overarching goals of this course are to inspire students to explore unfamiliar waters, without fear of failure, create an intensive, shared experience, accessible to all students, and build community among students.” (Course description)

Semesters Taught: Spring 2021, Spring 2022

Tags


- democracy (phil)

- censorship (phil)

- free speech (phil)

Module Overview

In this module we first consider the rise of fake news, hate speech, and polarization on social media platforms. We then consider these phenomena in the context of a democratic society. We explain both (1) why these phenomena are considered threats to democracy and (2) why democratic commitments should nonetheless make one uneasy about censorship and speech regulation. To clarify the concern, we explain the concept of democracy as a system of governance and the role that the public sphere plays in democratic decision-making, and we outline five rights and opportunities that are essential for a flourishing democratic public sphere. This provides a helpful framing for the current debate over whether tech companies should regulate content in order to address fake news, hate speech, and polarization.

Students are then asked to consider particular design choices that social media platforms have made, or could make, to address these issues (e.g., flagging fake news on Facebook, preventing the retweeting of hate speech on Twitter, or demonetizing extremist content on YouTube). Using the five rights and opportunities introduced, they assess how certain design features promote a flourishing democratic public sphere and how they might hinder it (or even violate certain democratic commitments).

Connection to Course Technical Material


The topic of content regulation on social media platforms was chosen because of its timely connection to the 2020 presidential election, during which ‘fake news’ and public discourse on social media platforms received widespread attention. Since this course is designed for CS concentrators and non-concentrators alike, the aim of the module is to give students the philosophical tools to reason through different design choices. In a more advanced class, one might ask students not only to imagine but also to implement their own design choices.

To introduce the idea of responsible design practices, the CS faculty begins the class session by discussing notable ethical failures, including Mark Zuckerberg’s FaceMash. The Embedded EthiCS module builds on this focus on responsible design practices by taking a closer look at content regulation on social media platforms. Students consider how, in their role as computer scientists, their design choices can promote or hinder certain values (in this case, democratic ones).

Goals

Module Goals

- Familiarize students with the problems of fake news, hate speech, and polarization. Discuss these problems within the context of democracy.

- Help students see why social media platforms are of special concern from the standpoint of democracy (namely, it is on these platforms where people acquire information, share information, and discuss matters of political importance). Help students see the reasoning behind both sides of the current debate regarding whether or not social media platforms should regulate content in response to these problems.

- Familiarize students with the 5 rights and opportunities that characterize a well-functioning democratic public sphere.

- Show students how social media platforms can promote or hinder democratic values and discourse through design choices.

- Give students practice applying the 5 rights and opportunities to determine which design choices best promote a democratic public sphere. Give them practice justifying their design choices by appealing to these rights and opportunities.

Key Philosophical Questions


Question 8 is the key philosophical question for this module, and Questions 1-3 provide students with the tools and concepts to answer it. Questions 4-6 help motivate the importance of content regulation on social media platforms, and Question 7 emphasizes how computer scientists may find themselves in a position that requires making responsible and informed design choices.

1. What is a democracy?

2. What role does the informal public sphere play in democratic decision-making?

3. What are the rights and opportunities that characterize a flourishing informal public sphere?

4. How has social media come to function as the informal public sphere?

5. How does the structure of social media platforms impact the distribution of, and engagement with, news and information? What impact does this have on public discourse?

6. What problems do fake news, hate speech, and polarization pose to democracy?

7. Do social media companies like Twitter, YouTube and Facebook have a responsibility to regulate content on their platforms? Should they be involved at all?

8. How might particular design features of social media platforms promote or hinder the 5 rights and opportunities that characterize a flourishing democratic public sphere?

Materials

Key Philosophical Concepts


The 5 rights and opportunities of a democratic public sphere (introduced in the Fung & Cohen reading) are useful analytical tools that help students gain traction on what might otherwise be an abstract concept of democracy. They also add nuance to the concepts of free speech and censorship.

- Democracy

- Free Speech

- Censorship

- Fair Opportunity

- 5 Rights and Opportunities of a Democratic Public Sphere: Rights, Expression, Access, Diversity, Communicative Power

Assigned Readings


The Fung & Cohen excerpt provides an overview of 5 rights and opportunities required for a democratic public sphere. Since students will be utilizing this framework for the module activity, we recommend dedicating a portion of class time to reviewing this material.

To create explicit connections between the philosophical and technical content in this module, we recorded interviews with leading experts in this field and edited the footage into a 20-minute video. The interviews covered the relationship between social media and democratic values, mass media versus digital media spheres, whether social media companies have a responsibility to regulate content on their platforms, and the benefits and pitfalls of particular design choices.

- Excerpt from Archon Fung and Joshua Cohen, “Democracy and the Digital Public Sphere” in Digital Technology and Democratic Theory (forthcoming 2021)

- Interview Video – Archon Fung, Thi Nguyen and Regina Rini (internal resource)

Implementation

Class Agenda

- Democracy and the Digital Public Sphere

- Introduce 5 rights and opportunities

- Class activity: Case analysis of Facebook, Twitter and YouTube

- Discussion: Which design choices promote a democratic public sphere?

Sample Class Activity


Each small group is given a different discussion prompt. This makes for a lively whole-class discussion after the activity, as each group can share the case it was asked to evaluate and its decision about which design choice is best to implement. Moreover, since every group must justify its selection with reference to the 5 rights and opportunities for a well-functioning democracy, all students are in a position to critically reflect on their peers’ analyses.

Students are placed into small groups for this activity and each group is provided with a Google Form detailing a unique case study to evaluate.

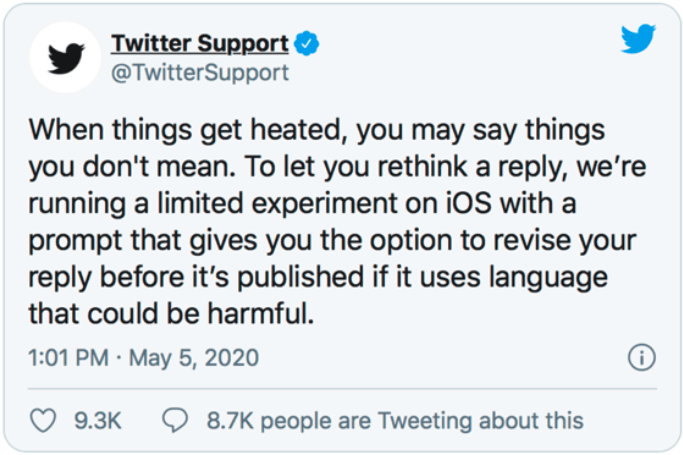

Proposal 1: Twitter uses an algorithm to detect offensive language and then gives users a ‘nudge’ to reconsider, and possibly revise, their content before publishing it on the platform.

Proposal 2: However, a designer at Twitter believes that there needs to be a different approach to content regulation. Her proposal is that the platform should publicly flag content that the algorithm detects as ‘offensive’ but that users choose to publish anyway. If users are found to repeatedly post offensive content, their tweets will be prevented from appearing in Twitter’s news feed (but will remain accessible by clicking on the individual user’s profile).

- Which form of content regulation do you feel is best?

- Which proposed form of content regulation do you think best preserves the 5 rights and opportunities necessary for a democratic public sphere?

- How would you justify your answer with reference to the 5 rights and opportunities? Please be specific, e.g. “Proposal 1 best promotes ‘access’ because …” (Note: there may be multiple rights and opportunities that are relevant)

Module Assignment


Proposal 1 – This assignment follows the same format as the class activity students were asked to complete. This was beneficial insofar as students gained practice performing this kind of analysis before completing the graded assignment.

Proposal 2 – The goal was for students to articulate and justify their position with reference to the five rights and opportunities. We provided the regular course teaching fellows (TFs) with a grading rubric detailing a variety of potential responses (e.g., one could argue that this design choice promotes the opportunity for expression for reasons X and Y; alternatively, one could argue that it conflicts with the opportunity for expression for reason Z).

Proposal 1: Facebook relies on an algorithm as well as individual users’ reports to identify content that is potentially ‘fake news’. Once the content has been identified, it is sent to third-party fact-checkers for verification. If the content is verified as fake news, it is publicly flagged with a warning that it is disputed by fact-checkers.

Proposal 2: However, a designer at Facebook believes that there needs to be a different approach to content regulation. Her proposal is that content deemed problematic by third-party fact-checkers should be prevented from being shared on the platform altogether.

- Which form of content regulation do you feel is best?

- Which proposed form of content regulation do you think best preserves the 5 rights and opportunities necessary for a democratic public sphere?

- How would you justify your answer with reference to the 5 rights and opportunities? Please be specific, e.g. “Proposal 1 best promotes ‘access’ because …” (Note: there may be multiple rights and opportunities that are relevant)

Lessons Learned


In a different version of this module, one could focus on particular problems related to social media platforms: more specifically, the amplification and spread of misinformation and ‘fake news’, as well as the distortion of our online social communities (phenomena referred to as ‘filter bubbles’ and ‘echo chambers’). For assigned reading, we recommend pairing these accessible public philosophy pieces:

- Regina Rini, “How to Fix Fake News” (October 2018) New York Times – The Stone

- (recommended) Thi Nguyen, “Escape the Echo Chamber” (April 2018) Aeon

Given the large size of the class (>100 students), the discussion section and activity were led by the regular course TFs rather than by the Embedded EthiCS fellows. For this reason, the Embedded EthiCS fellows hosted a training session for the TFs that included a run-through of the activity, so that the TFs could become familiar with the philosophical concepts.

Except where otherwise noted, content on this site is licensed under a Creative Commons Attribution 4.0 International License.

Embedded EthiCS is a trademark of President and Fellows of Harvard College